Hello,

In my previous post, I showed how to see USED and PEEKED bind variables from SQL Monitor views, either in memory and/or from AWR repository.

In this new one, I show how to see these same values from Execution Plans (either GV$SQL_PLAN and/or DBA_HIST_SQL_PLAN) as well as from Captured SQL binds (either from GV$SQL_BIND_CAPTURE and/or DBA_HIST_SQLBIND).

So, here is the same test case I’ve created in the previous post:

create table t (

col_n number, col_ch char, col_vc varchar2(10),

col_dt date, col_ts timestamp);

Table created.

define mydt="2023/10/01 12:01:12"

define myts="2023/10/01 12:01:12.123"

insert into t values (

1, 'A', 'Edu$!123', to_date('&mydt','yyyy-mm-dd hh24:mi:ss'), to_timestamp('&myts','yyyy-mm-dd hh24:mi:ss.ff'));

1 row created.

Now let’s run a query with binds:

alter session set nls_date_format='yyyy-mm-dd hh24:mi:ss';

alter session set nls_timestamp_format='yyyy-mm-dd hh24:mi:ss.ff';

set serveroutput on

declare

n number;

c char;

vc varchar2(10);

dt date;

ts timestamp;

function run_query

return number is

rn number;

begin

select COL_N

into rn

from t

where COL_VC!='MYQUERY3'

and COL_N = n

and COL_CH = c

and COL_VC like vc

and COL_DT = dt

and COL_TS = ts

;

return rn;

exception when no_data_found then return (-1);

end;

begin

n := 1;

c := 'A';

vc := 'Edu%';

dt := to_date('&mydt','yyyy-mm-dd hh24:mi:ss');

ts := to_timestamp('&myts','yyyy-mm-dd hh24:mi:ss.ff');

dbms_output.put_line(run_query);

n := 2;

c := 'B';

vc := 'ABC%';

dt := sysdate;

ts := systimestamp;

dbms_output.put_line(run_query);

end;

/

1

-1

Let me find the SQL_ID of my query:

select sql_id, executions, sql_text

from v$sql

where sql_text like q'[SELECT %COL_VC!='MYQUERY3'%]';

SQL_ID EXECUTIONS SQL_TEXT

------------- ---------- ------------------------------------------------------------

fb3gqgd87ac8h 2 SELECT COL_N FROM T WHERE COL_VC!='MYQUERY3' AND COL_N = :B5

1 row selected.

Now, here is the script to get the bind values from the SQL Plans:

col bind_name format a10 wrap

col peeked_value format a40

col datatype head "DATA_TYPE" for a20

col src for a9

def sqlid="fb3gqgd87ac8h"

def datini=sysdate-1

def datfin=sysdate

select src, INST_ID, sql_id, plan_hash_value, child_number, c.bind_name, c.bind_pos, c.bind_type,

decode(bind_type,1,'VARCHAR2',2,'NUMBER',12,'DATE',96,'CHAR',180,'TIMESTAMP',181,'TIMESTAMP WITH TZ',231,'TIMESTAMP WITH LTZ',to_char(bind_type)) datatype,

case

when c.bind_type = 1 /*varchar2*/ then utl_raw.cast_to_varchar2(c.bind_data)

when c.bind_type = 2 /*number*/ then to_char(utl_raw.cast_to_number(c.bind_data))

when c.bind_type = 96 /*char*/ then to_char(utl_raw.cast_to_varchar2(c.bind_data))

when c.bind_type = 12 /*date*/ then TO_CHAR(dbms_stats.convert_raw_to_date(c.bind_data),'yyyy-mm-dd hh24:mi:ss')

when bind_type = 180 /*timestamp*/ then

to_char(

to_timestamp(

to_char(

dbms_stats.convert_raw_to_date(hextoraw(substr(bind_data,1,14)))

,'yyyy-mm-dd hh24:mi:ss')

,'yyyy-mm-dd hh24:mi:ss')

+ numtodsinterval(nvl(to_number(hextoraw(substr(bind_data,15,8)),'XXXXXXXX')/1e9,0), 'SECOND')

,'yyyy-mm-dd hh24:mi:ss.ff9')

else 'Not printable'

end as peeked_value

from (

select 'SQLPLAN-M' src, sql_id, plan_hash_value, OTHER_XML, child_number, INST_ID

from gv$sql_plan

UNION ALL

select 'SQLPLAN-H' src, sql_id, plan_hash_value, OTHER_XML, to_number(NULL) as child_number, to_number(NULL) as INST_ID

from dba_hist_sql_plan

join dba_hist_snapshot using (DBID)

where END_INTERVAL_TIME between &datini and &datfin

) p,

xmltable

(

'/*/peeked_binds/bind' passing xmltype(p.other_xml)

columns

bind_name varchar2(30) path './@nam',

bind_pos number path './@pos',

bind_type number path './@dty',

bind_data raw(2000) path '.'

) c

where sql_id = '&sqlid'

and p.other_xml is not null

order by src, inst_id, sql_id, plan_hash_value, child_number, bind_pos, peeked_value

/

SRC In SQL_ID PLAN_HV CHILD_NUMBER BIND_NAME BIND_POS BIND_TYPE DATA_TYPE PEEKED_VALUE

--------- --- ------------- ------------ ------------ ---------- ---------- ---------- -------------------- ----------------------------------------

SQLPLAN-M 1 fb3gqgd87ac8h 1601196873 0 :B5 1 2 NUMBER 1

SQLPLAN-M 1 fb3gqgd87ac8h 1601196873 0 :B4 2 96 CHAR A

SQLPLAN-M 1 fb3gqgd87ac8h 1601196873 0 :B3 3 1 VARCHAR2 Edu%

SQLPLAN-M 1 fb3gqgd87ac8h 1601196873 0 :B2 4 12 DATE 2023-10-01 12:01:12

SQLPLAN-M 1 fb3gqgd87ac8h 1601196873 0 :B1 5 180 TIMESTAMP 2023-10-01 12:01:12.123000000

5 rows selected.

NOTE: you can get peeked values from SQL Plans direct in your execution plan. Maria Colgan explains how you can do that here.

And here is the script to get the bind values from the Captured binds:

col bind_name format a10 wrap

col captured_value format a40

col DATATYPE_STRING for a20

col src for a9

def sqlid="fb3gqgd87ac8h"

def datini=sysdate-1

def datfin=sysdate

select DISTINCT src, INST_ID, sql_id, plan_hash_value, child_number,

name as bind_name, position as bindpos, DATATYPE_STRING,

case

when DATATYPE in (1,2,96) /*varchar2,number,char*/ then VALUE_STRING

when DATATYPE = 12 /*date*/ then to_char(anydata.accessDate(value_anydata),'yyyy-mm-dd hh24:mi:ss')

when DATATYPE = 180 /*timestamp*/ then to_char(anydata.accesstimestamp(value_anydata),'yyyy-mm-dd hh24:mi:ss.ff9')

else 'Not printable'

END captured_value

from (

select 'CAPTURE-M' src,

sql_id, child_number, PLAN_HASH_VALUE, INST_ID,

name, position, datatype, DATATYPE_STRING, value_string, value_anydata

from gv$sql_bind_capture

join gv$sql s using (INST_ID, sql_id, child_number)

UNION ALL

select 'CAPTURE-H' src,

sql_id, to_number(NULL) as child_number, to_number(NULL) PLAN_HASH_VALUE, INSTANCE_NUMBER as INST_ID,

name, position, datatype, DATATYPE_STRING, value_string, value_anydata

from dba_hist_sqlbind

join dba_hist_snapshot using (DBID, INSTANCE_NUMBER, SNAP_ID)

where END_INTERVAL_TIME between &datini and &datfin

) c

where sql_id = '&sqlid'

order by src, INST_ID, sql_id, plan_hash_value, child_number, position, captured_value

/

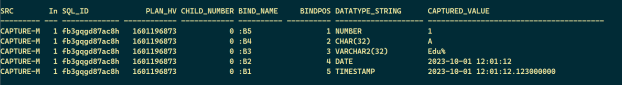

SRC In SQL_ID PLAN_HV CHILD_NUMBER BIND_NAME BINDPOS DATATYPE_STRING CAPTURED_VALUE

--------- --- ------------- ------------ ------------ ---------- ---------- -------------------- ----------------------------------------

CAPTURE-M 1 fb3gqgd87ac8h 1601196873 0 :B5 1 NUMBER 1

CAPTURE-M 1 fb3gqgd87ac8h 1601196873 0 :B4 2 CHAR(32) A

CAPTURE-M 1 fb3gqgd87ac8h 1601196873 0 :B3 3 VARCHAR2(32) Edu%

CAPTURE-M 1 fb3gqgd87ac8h 1601196873 0 :B2 4 DATE 2023-10-01 12:01:12

CAPTURE-M 1 fb3gqgd87ac8h 1601196873 0 :B1 5 TIMESTAMP 2023-10-01 12:01:12.123000000

5 rows selected.

And that’s it for today.

See you next time!